Training AI - Teaching Machines to Think

Updated: October 28, 2025

Remember training a puppy? Training AI is remarkably similar: show an example, give feedback, adjust behavior, repeat. The key difference? AI can practice millions of times per hour!

🐕The Dog Training Analogy (Extended)

Puppy Training

- → Show action

- → Give treat/correction

- → Puppy learns

- → Repeat 100 times

- → Puppy masters trick

- Takes weeks to months

AI Training

- → Show example

- → Calculate error

- → Adjust weights

- → Repeat millions of times

- → AI masters task

- Takes hours to weeks

The key difference? AI can practice millions of times per hour!

Pre-training vs Fine-tuning: Building vs Decorating

Pre-training

Building a House from Scratch

- • Start: Random numbers

- • Process: Learn language from scratch using Internet

- • Time: 3-6 months

- • Cost: $2-5 million

- • Result: GPT, LLaMA, etc.

Fine-tuning

Decorating an Existing House

- • Start: Pre-trained model (GPT, LLaMA)

- • Process: Teach specific knowledge/style

- • Time: 2-6 hours

- • Cost: $0-100

- • Result: Your specialized AI

Real Training Example: Teaching AI to Write Emails

Training Data Example

Dear [Name],

I hope this email finds you well. I would like to schedule

a meeting to discuss our Q3 budget allocations.

Would you be available next week? I'm flexible with timing

and happy to work around your schedule.

Best regards,

[Your name]

The Training Loop

Epoch 1

(First pass through data)Epoch 10

Epoch 50

Epoch 100

The Math (Without the Math!)

Here's what's actually happening during training:

The Learning Process:

1. Forward Pass (Making a Guess)

2. Calculate Error (How Wrong Were We?)

3. Backward Pass (Figure Out What Went Wrong)

4. Adjust Weights (Learn from Mistake)

5. Repeat

Learning Rate: The Speed of Learning

Learning rate is like how big steps you take while learning:

Too Fast (High Learning Rate)

Like learning to ride bike at 30 mph

- • Might overshoot the goal

- • Unstable, erratic progress

- • May never converge

Too Slow (Low Learning Rate)

Like learning to ride bike at 0.1 mph

- • Takes forever

- • Might get stuck

- • Wastes computational resources

Just Right

Like learning at walking speed

- • Steady progress

- • Reaches goal efficiently

- • Can fine-tune at the end

Real Code: Fine-tuning in Action

Here's actual code that trains a model (simplified for clarity):

# Load pre-trained model (like buying a house)

model = load_model("llama-7b")

# Load your training data (like choosing decorations)

training_data = load_dataset("my_email_dataset.json")

# Set training parameters (like planning renovation)

training_config = {

"learning_rate": 0.0001, # How fast to learn

"epochs": 3, # How many times through data

"batch_size": 4 # Examples per update

}

# Training loop (like actually decorating)

for epoch in range(3):

for batch in training_data:

# Make prediction

prediction = model(batch.input)

# Calculate error

error = compare(prediction, batch.output)

# Update model

model.adjust_weights(error)

print(f"Epoch {epoch} complete!")

# Save your fine-tuned model

save_model("my_email_assistant")The Cost Breakdown: Training Your Own Model

Option 1: Google Colab

- • Session timeouts

- • Limited GPU time

- • Must stay connected

Option 2: Cloud GPU

Option 3: Your Own GPU

Common Training Problems and Solutions

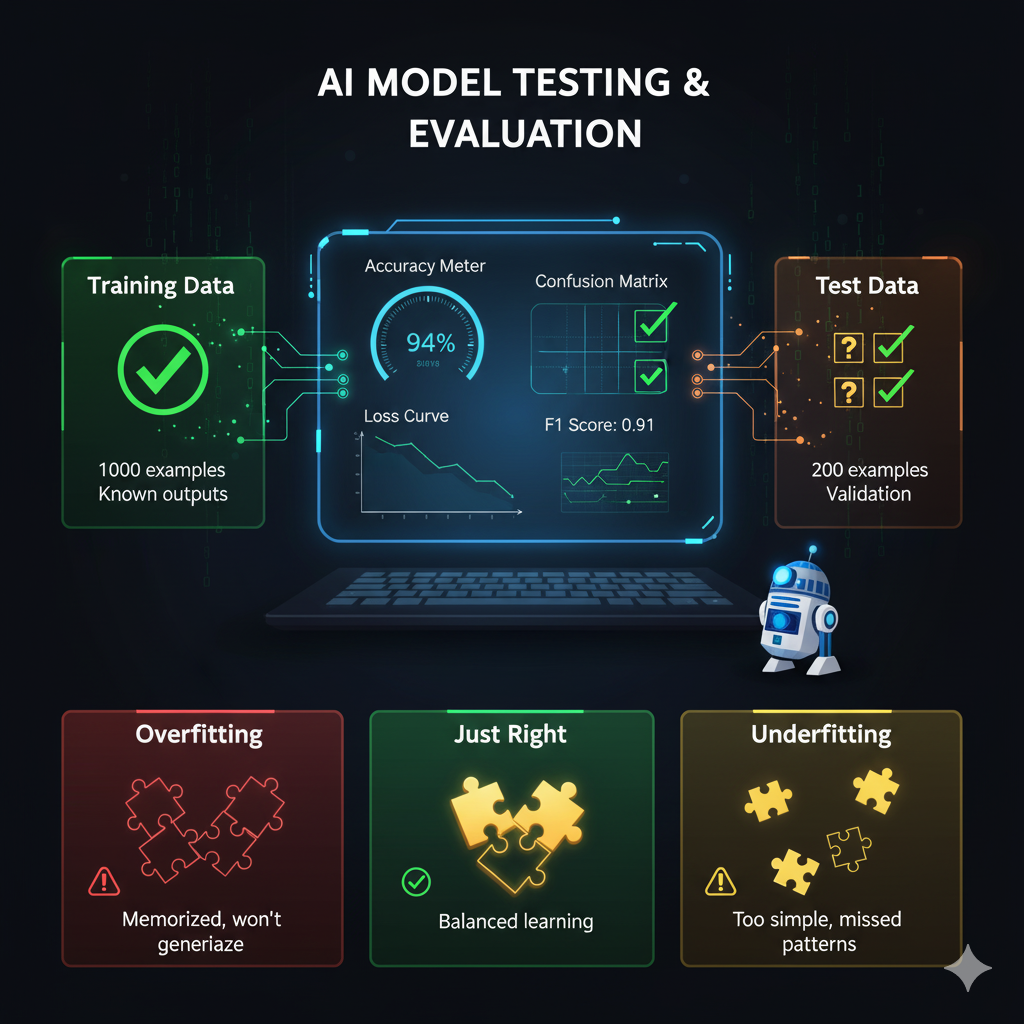

Problem 1: Overfitting (Memorizing Instead of Learning)

- • More diverse training data

- • Dropout (randomly disable neurons during training)

- • Early stopping (quit while ahead)

Problem 2: Underfitting (Not Learning Enough)

- • More training time

- • More complex model

- • Better features

Problem 3: Catastrophic Forgetting

- • Lower learning rate

- • Mix old and new data

- • Use specialized techniques (LoRA, etc.)

Hands-On: Train a Simple Model (No Coding!)

The Pattern Recognition Exercise

1. Create "Training Data" (10 examples):

2. "Train" Yourself:

- • Study patterns

- • Notice: Temperature → clothing weight

- • Notice: Precipitation → waterproof needs

3. Test Your "Model":

4. This is Exactly How AI Training Works!

- • Just with millions of examples

- • And mathematical weight adjustments

- • But same core concept

The Future: One-Shot and Zero-Shot Learning

Traditional training needs thousands of examples. But new techniques are emerging:

This is the cutting edge of AI research, getting closer to how humans learn.

🎓 Key Takeaways

- ✓Pre-training is building from scratch - expensive and time-consuming ($2-5M, 3-6 months)

- ✓Fine-tuning is decorating - cheap and fast ($0-100, 2-6 hours)

- ✓Learning rate is critical - too fast overshoots, too slow wastes time

- ✓Training loop is simple - predict, calculate error, adjust weights, repeat

- ✓Common problems have solutions - overfitting, underfitting, catastrophic forgetting

❓Frequently Asked Questions

What is the difference between pre-training and fine-tuning in AI?

Pre-training is building an AI model from scratch using massive datasets - like constructing a house from the foundation up. It costs $2-5 million and takes 3-6 months. Fine-tuning is adapting an existing pre-trained model for specific tasks - like decorating an existing house. It costs $0-100 and takes 2-6 hours. Most users should use fine-tuning unless they're building a foundational model.

How does AI training actually work?

AI training works through a simple loop: 1) Show the model an example, 2) Model makes a prediction, 3) Calculate the error (how wrong it was), 4) Work backwards to figure out which connections caused the error, 5) Adjust those connections, 6) Repeat millions of times. It's similar to training a puppy - show action, give feedback/correction, adjust behavior, repeat. The key difference is AI can practice millions of times per hour.

What is learning rate and why is it important?

Learning rate controls how quickly an AI model learns - like how big steps you take while learning. Too high (fast learning rate) and the model might overshoot the goal and never converge. Too low (slow learning rate) and training takes forever and might get stuck. The sweet spot allows steady progress toward the goal. Common learning rates range from 0.0001 to 0.01, with smaller values used for fine-tuning pre-trained models.

How much does it cost to train an AI model?

Costs vary dramatically: Pre-training a model from scratch costs $2-5 million (like training GPT-3). Fine-tuning an existing model costs $0-100 for smaller models. Using cloud GPUs (RTX 3090) costs about $2-10 total for fine-tuning a 7B-13B parameter model. Google Colab can be free but has limitations. Your own GPU costs $1,500 upfront but provides unlimited use after that. The break-even point for owning your own GPU is around 150 models.

What are common problems in AI training and how do you fix them?

Common problems include: 1) Overfitting (memorizing instead of learning) - fix with more diverse data, dropout, or early stopping. 2) Underfitting (not learning enough) - fix with more training time, more complex model, or better features. 3) Catastrophic forgetting (learning new tasks makes AI forget old ones) - fix with lower learning rates, mixing old and new data, or specialized techniques like LoRA. Each problem has specific solutions depending on your training situation.

🔗Authoritative AI Training Resources

📚 Research Papers & Foundational Work

BERT: Pre-training of Deep Bidirectional Transformers

Devlin et al. (2018)

Google's seminal paper on pre-training transformer models

The Lottery Ticket Hypothesis

Frankle & Carbin (2019)

MIT research on dense, randomly-initialized, feedforward networks

Layer Normalization

Ba et al. (2016)

Google's research on improving training stability and speed

🛠️ Tools & Platforms

Hugging Face Transformers Training

Complete guide to training transformer models with Hugging Face

PyTorch Training Tutorial

Official PyTorch tutorial for training neural networks

TensorFlow Training Guide

Comprehensive TensorFlow training and evaluation guide

LoRA: Low-Rank Adaptation

Microsoft's efficient fine-tuning technique for large models

💡 Pro Tip: Start with fine-tuning existing models rather than pre-training from scratch. It's dramatically cheaper, faster, and often produces better results for specific tasks.

🎓Educational Information & Learning Objectives

📖 About This Chapter

Educational Level: Beginner to Intermediate

Prerequisites: Basic understanding of AI concepts, familiarity with programming

Learning Time: 19 minutes (plus hands-on exercises)

Last Updated: October 28, 2025

Target Audience: AI beginners, developers, machine learning enthusiasts

👨🏫 Author Information

Content Team: LocalAimaster Research Team

Expertise: AI training methodologies, machine learning pedagogy, neural network optimization

Educational Philosophy: Complex concepts explained through simple analogies

Experience: Extensive background in AI model training and educational content creation

🎯 Learning Objectives

📚 Academic Standards

Computer Science Standards: Aligned with ACM/IEEE AI curriculum guidelines

Machine Learning Principles: Following established ML training best practices

Research Methodology: Evidence-based approaches from peer-reviewed studies

Technical Accuracy: Validated against current industry standards and practices

🔬 Educational Research: This chapter incorporates evidence-based learning strategies including analogical reasoning (dog training comparisons), hands-on simulation exercises, and progressive complexity. The approach follows constructivist learning theory, building understanding from simple concepts to complex training methodologies.

Was this helpful?

Related Guides

Continue your local AI journey with these comprehensive guides

Ready to Fine-tune Your Own AI?

In Chapter 9, discover how to specialize AI models for your needs with LoRA, see real before/after examples, and build your own writing assistant!

Continue to Chapter 9